Tggl Proxy

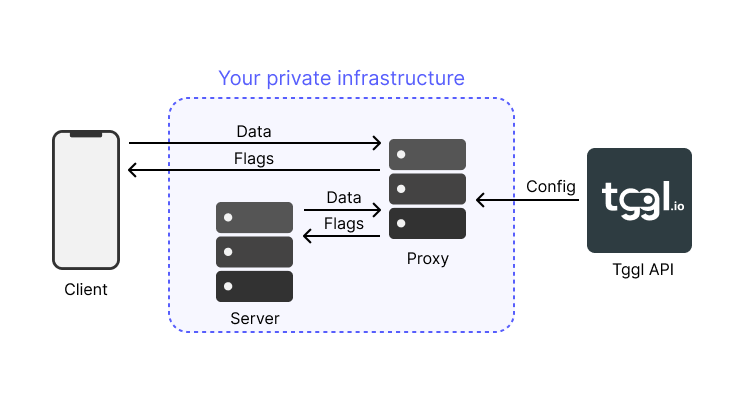

The Tggl proxy sits between the Tggl API and your application, on your own infrastructure. It is a simple HTTP server that periodically syncs with the Tggl API and caches the feature flag configuration in memory. It mirrors the Tggl API one-to-one, so your application can evaluate feature flags without making a request to the Tggl API.

The proxy has 3 main benefits:

- Performance: The proxy can run close to your end users and handle thousands of requests per second or more depending on your infrastructure.

- Privacy: Since the proxy runs on your infrastructure, your data never leaves your network and never reaches the Tggl API.

- Reliability: Since the proxy does not need the Tggl API to answer requests, it can keep serving feature flags even if the Tggl API is down. It can even persist its configuration to a storage so that it survives restarts.

Running the proxy with Docker

Create a docker-compose.yml file with the following content:

```yaml
version: '3.9'

services:
  tggl:
    image: tggl/tggl-proxy
    environment:
      TGGL_API_KEY: YOUR_SERVER_API_KEY
    ports:
      - '3000:3000'
```

Now simply run:

```shell
docker-compose up
```

The proxy will be available on port 3000 of your machine. You can change the port by editing the ports section, for instance '4001:3000' to expose the proxy on port 4001.

Check out the configuration reference below to see all the options you can pass to the proxy, along with their environment variables.

Running the proxy with Node

Install the proxy as a dependency of your project:

```shell
npm i tggl-proxy
```

Then simply start the proxy:

```typescript
import { createApp } from 'tggl-proxy'

const app = createApp({
  apiKey: 'API_KEY',
})

app.listen(3000, () => {
  console.log('Listening on port 3000')
})
```

The apiKey option is the only required option; it can also be passed via the TGGL_API_KEY environment variable.

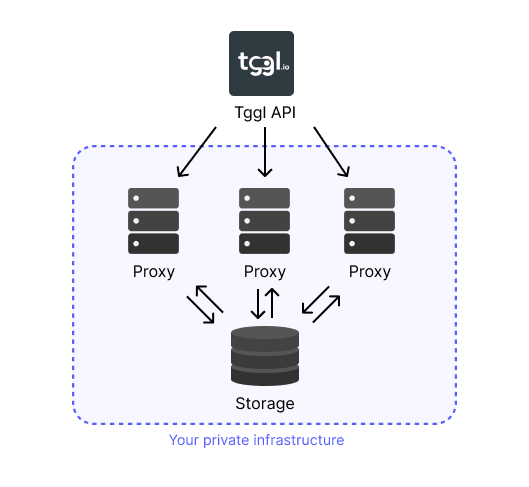

Storage

By default, the proxy does not persist the configuration to any storage, but you can specify a central storage that all instances share to improve reliability. You can even use multiple storages for more redundancy.

- When a proxy starts and fails to call the Tggl API, it will load the configuration from one of the storages.

- When a proxy fetches the configuration from the Tggl API, it will save it to all storages.

- If an instance loses access to the internet, it will keep polling the storages and benefit from other instances updating the configuration.
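The load and save rules above can be sketched as follows. This is a minimal illustration, not the proxy's actual code: the ConfigStorage interface and its getConfig/setConfig method names are hypothetical, and MemoryStorage is an in-memory stand-in with a down flag to simulate an outage.

```typescript
// Hypothetical storage interface: stores and retrieves the config as a string.
interface ConfigStorage {
  getConfig(): Promise<string | null>
  setConfig(config: string): Promise<void>
}

// In-memory stand-in for Postgres, Redis, S3...; `down` simulates an outage.
class MemoryStorage implements ConfigStorage {
  value: string | null = null
  down = false

  async getConfig(): Promise<string | null> {
    if (this.down) throw new Error('storage unavailable')
    return this.value
  }

  async setConfig(config: string): Promise<void> {
    if (this.down) throw new Error('storage unavailable')
    this.value = config
  }
}

// After a successful fetch from the Tggl API: save to every storage.
async function saveToAll(storages: ConfigStorage[], config: string): Promise<void> {
  // A failing storage must not prevent the others from being updated.
  await Promise.all(storages.map((s) => s.setConfig(config).catch(() => undefined)))
}

// When the Tggl API is unreachable: use the first config any storage returns.
async function loadFromAny(storages: ConfigStorage[]): Promise<string | null> {
  for (const storage of storages) {
    try {
      const config = await storage.getConfig()
      if (config !== null) return config
    } catch {
      // This storage is down; try the next one.
    }
  }
  return null
}
```

Saving with Promise.all plus a per-storage catch is one way to get "save to all, tolerate any being down"; loading stops at the first storage that answers.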

You can save the configuration to a Postgres database by setting the POSTGRES_URL environment variable. The proxy will create a table named tggl_config and store the configuration every time it is updated.

You can also save the configuration to Redis by setting the REDIS_URL environment variable. The proxy will create a hash under the key tggl_config and store the configuration every time it is updated.

Another alternative is to save the configuration to AWS S3 by setting the S3_ACCESS_KEY_ID, S3_BUCKET_NAME, S3_REGION, and S3_SECRET_ACCESS_KEY environment variables. The proxy will save the configuration under the key tggl_config.

If you specify multiple storages (any combination works), the proxy will save the configuration to all storages and will tolerate any of them being down.

Security

All calls to the /flags, /report, and /config endpoints must be authenticated via the X-Tggl-Api-Key header. It is up to you to decide which keys are accepted, via the clientApiKeys option.

You can also completely disable the X-Tggl-Api-Key header check by setting the rejectUnauthorized option to false.

The proxy is secure by default: either set rejectUnauthorized to false or provide a list of valid API keys via the clientApiKeys option. If you do neither, the proxy will reject every call.
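The rules above amount to a simple check on each call to /flags, /report, and /config. The sketch below is illustrative only: parseClientApiKeys and isAuthorized are hypothetical helpers, and trimming whitespace around the comma-separated TGGL_CLIENT_API_KEYS value is an assumption about how the proxy parses it.

```typescript
// Hypothetical helper: turns the TGGL_CLIENT_API_KEYS value into an array.
// Assumption: keys are comma-separated and surrounding whitespace is ignored.
function parseClientApiKeys(raw: string | undefined): string[] {
  if (!raw) return []
  return raw
    .split(',')
    .map((key) => key.trim())
    .filter((key) => key.length > 0)
}

// Hypothetical helper: decides whether a call may proceed, given the value
// of its X-Tggl-Api-Key header.
function isAuthorized(
  headerValue: string | undefined,
  clientApiKeys: string[],
  rejectUnauthorized: boolean,
): boolean {
  // With rejectUnauthorized set to false, the header is not checked at all.
  if (!rejectUnauthorized) return true
  // Otherwise the key must be in the list; an empty list rejects every call.
  return headerValue !== undefined && clientApiKeys.indexOf(headerValue) !== -1
}
```

Note how the secure default falls out of the logic: with rejectUnauthorized left at true and an empty clientApiKeys list, no header value can pass.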

Note that if you expose the proxy to the outside world, you may want to restrict access to the /config, /health, and /metrics endpoints.

Cold start behavior

When the proxy starts, it immediately accepts HTTP requests, but it only responds once the configuration has been successfully fetched, from the Tggl API or from the storage, at least once.

The proxy keeps retrying to fetch the configuration from the Tggl API and from the storage every 500 ms until it succeeds or until maxStartupTime is reached. If it cannot fetch the configuration within that time, it starts responding to all requests using an empty configuration (no flags).

Whether or not it fetches the configuration within maxStartupTime, the proxy then polls the Tggl API and the storage for the configuration every pollingInterval ms.
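To make the timing concrete, here is a simulation of the startup sequence using the 500 ms retry delay described above and the default maxStartupTime of 10000 ms from the configuration reference. It uses a simulated clock instead of real timers. simulateColdStart, tryFetch, and the simplified Config type are hypothetical names, and the proxy's real implementation may differ in details such as whether an attempt happens exactly at maxStartupTime.

```typescript
// Simplified stand-in for the real configuration.
type Config = { flags: string[] }

// tryFetch(t) returns a config if the API or a storage answers at simulated
// time t (in ms since startup), or null if both fail.
function simulateColdStart(
  tryFetch: (t: number) => Config | null,
  maxStartupTime = 10000,
  retryInterval = 500,
): { config: Config; readyAt: number; fromEmpty: boolean } {
  for (let t = 0; t < maxStartupTime; t += retryInterval) {
    const config = tryFetch(t)
    if (config !== null) {
      // Held requests are answered as soon as a config is available.
      return { config, readyAt: t, fromEmpty: false }
    }
  }
  // Gave up: serve an empty configuration (no flags).
  // In both cases, regular polling every pollingInterval ms starts next.
  return { config: { flags: [] }, readyAt: maxStartupTime, fromEmpty: true }
}
```

If the API and storage only become reachable 1.5 seconds after startup, requests held during that time are answered at the 1500 ms attempt. If they never answer, requests are held for the full 10 seconds and then served with no flags.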

API

/flags

The proxy exposes a POST /flags endpoint that exactly mirrors the Tggl API. This means that any SDK can be configured to use the proxy instead of the Tggl API simply by providing the proxy's URL.

This endpoint evaluates flags locally using the last successfully synced configuration.

The path of the endpoint can be configured using the path option. You can also set up custom CORS rules using the cors option.
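As an illustration, a client call to the proxy's /flags endpoint could be prepared as below. This is a sketch under assumptions: it uses the default '/flags' path, the proxy URL and client key are placeholders, and the JSON body (a single context object such as { userId: 'abc' }) is an assumed shape, so check the Tggl API reference for the exact payload. buildFlagsRequest is a hypothetical helper whose url and init fields match the arguments of the standard fetch(url, init) call.

```typescript
// Placeholder evaluation context; its exact shape depends on your flags.
type Context = Record<string, unknown>

interface FlagsRequest {
  url: string
  init: { method: string; headers: Record<string, string>; body: string }
}

// Hypothetical helper: only the base URL differs from a call to the Tggl API.
function buildFlagsRequest(proxyBaseUrl: string, clientApiKey: string, context: Context): FlagsRequest {
  return {
    // Drop a trailing slash so we never produce '//flags'.
    url: proxyBaseUrl.replace(/\/$/, '') + '/flags',
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        // Must be one of the proxy's clientApiKeys unless rejectUnauthorized is false.
        'X-Tggl-Api-Key': clientApiKey,
      },
      body: JSON.stringify(context),
    },
  }
}
```

With Node 18 or later, which ships a global fetch, the result could be sent with fetch(req.url, req.init).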

/report

The proxy also exposes a POST /report endpoint that exactly mirrors the Tggl API. This means that any SDK can be configured to use the proxy instead of the Tggl API simply by providing the proxy's URL.

The proxy accumulates the received data and forwards it in a single API call to Tggl every 5 seconds, saving you a significant number of billed API calls and a lot of bandwidth.

The path of the endpoint can be configured using the reportPath option. It can be disabled by passing the string false. You can also set up custom CORS rules using the cors option.
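The batching described above can be sketched as a small accumulator: reports are merged as they arrive, and a timer (every 5 seconds in the proxy) flushes them in one upstream call. This is a hypothetical illustration: the ReportBatcher class, the counter-based Report shape, and the send callback are assumptions, not the actual /report payload format or the proxy's code.

```typescript
// Assumed report shape for illustration: counters keyed by name.
type Report = Record<string, number>

class ReportBatcher {
  private pending: Report = {}
  private received = 0
  private send: (merged: Report) => void

  // send is a placeholder for the single upstream call to the Tggl API.
  constructor(send: (merged: Report) => void) {
    this.send = send
  }

  // Called for each POST /report: merge its counters into the pending batch.
  add(report: Report): void {
    for (const key of Object.keys(report)) {
      this.pending[key] = (this.pending[key] || 0) + (report[key] || 0)
    }
    this.received++
  }

  // Called by a timer: forwards everything received since the last flush.
  flush(): void {
    if (this.received === 0) return // nothing to forward, no API call
    this.send(this.pending)
    this.pending = {}
    this.received = 0
  }
}
```

However many reports arrive between two flushes, at most one upstream call is made per interval, which is where the savings in billed calls and bandwidth come from.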

/config

The proxy exposes a GET /config endpoint that exactly mirrors the Tggl API. This means that any SDK can be configured to use the proxy instead of the Tggl API simply by providing the proxy's URL.

It serves the last successfully synced configuration.

The path of the endpoint can be configured using the configPath option. It can be disabled by passing the string false. You can also set up custom CORS rules using the cors option.

/health

The GET /health endpoint returns a 200 status code if the proxy is ready to serve requests. It returns a 503 status code if the proxy is unable to load the configuration or if the last successful sync is older than maxConfigAge. You can disable the stale config check by setting maxConfigAge to 0 or less.

The /health endpoint will still return a 200 status code if the proxy is unable to fetch the configuration from the API but is able to load a fresh configuration from the storage.

Even if the /health endpoint returns a 503 status code, the proxy will still serve the last known configuration correctly. If you use the health check to automatically restart the proxy, we recommend passing 0 as the maxConfigAge option to avoid restarting the proxy over and over if the Tggl API is down. Instead, you can monitor the /metrics endpoint to see if the proxy is able to fetch the configuration from the API.

The path of the endpoint can be configured using the healthCheckPath option. It can be disabled by passing the string false.
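The status logic above can be summarized as a pure function. This is a sketch, not the proxy's code: healthStatus and its parameters are hypothetical names, and lastSuccessfulSync stands for the time of the last successful sync from either the API or a storage (null if none happened yet).

```typescript
// Hypothetical sketch of the /health status decision.
// lastSuccessfulSync: epoch ms of the last successful sync (API or storage),
// or null if the configuration was never loaded.
function healthStatus(lastSuccessfulSync: number | null, maxConfigAge: number, now: number): 200 | 503 {
  // maxConfigAge <= 0 disables the check, even if no config was ever loaded.
  if (maxConfigAge <= 0) return 200
  // Never loaded from the API or a storage: not ready.
  if (lastSuccessfulSync === null) return 503
  // Stale: the last successful sync is older than maxConfigAge.
  return now - lastSuccessfulSync > maxConfigAge ? 503 : 200
}
```

Because a fresh sync from a storage also updates lastSuccessfulSync, the check passes while the Tggl API is down as long as another instance keeps the storage up to date.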

/metrics

The GET /metrics endpoint returns a Prometheus-compatible metrics payload. The path of the endpoint can be configured using the metricsPath option. It can be disabled by passing the string false.

A custom gauge metric named config_age_milliseconds represents the age of the last successful configuration sync, in milliseconds. The special value 999,999,999 means that the configuration was never successfully synced via the API or a storage.
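For illustration, the gauge value and its line in the Prometheus text exposition format could be computed as below. configAgeGauge and formatGauge are hypothetical helpers, and the HELP text is made up; the proxy's actual output (help strings, labels, other metrics) may differ.

```typescript
// Sentinel documented for "never synced successfully via API or storage".
const NEVER_SYNCED = 999999999

// Hypothetical: value of the config_age_milliseconds gauge at time `now`.
function configAgeGauge(lastSuccessfulSync: number | null, now: number): number {
  return lastSuccessfulSync === null ? NEVER_SYNCED : now - lastSuccessfulSync
}

// Renders a single gauge in the Prometheus text exposition format.
function formatGauge(name: string, help: string, value: number): string {
  return [`# HELP ${name} ${help}`, `# TYPE ${name} gauge`, `${name} ${value}`].join('\n')
}
```

An alert on this gauge staying above a few polling intervals is one way to detect that the proxy cannot reach the Tggl API, without relying on the /health endpoint.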

Configuration reference

The proxy can be configured by passing an options object. Any missing option falls back to its environment variable, then to its default value.

| Name | Env var | Required | Default | Description |
|---|---|---|---|---|
| apiKey | TGGL_API_KEY | ✓ | | Server API key from your Tggl dashboard |
| path | TGGL_PROXY_PATH | | '/flags' | URL to evaluate flags |
| reportPath | TGGL_REPORT_PATH | | '/report' | URL to send monitoring metrics to (pass the string false to disable) |
| configPath | TGGL_CONFIG_PATH | | '/config' | URL to get the latest config (pass the string false to disable) |
| healthCheckPath | TGGL_HEALTH_CHECK_PATH | | '/health' | URL to check if the server is healthy (pass the string false to disable) |
| metricsPath | TGGL_METRICS_PATH | | '/metrics' | URL to fetch the server metrics (pass the string false to disable) |
| cors | | | null | CORS configuration |
| url | TGGL_URL | | 'https://api.tggl.io/config' | URL of the Tggl API to fetch the configuration from |
| pollingInterval | TGGL_POLLING_INTERVAL | | 5000 | Interval in milliseconds between two configuration updates. Pass 0 to disable polling. |
| rejectUnauthorized | TGGL_REJECT_UNAUTHORIZED | | true | When true, any call with an invalid X-Tggl-Api-Key header is rejected (see clientApiKeys) |
| clientApiKeys | TGGL_CLIENT_API_KEYS | | [] | Keys accepted via the X-Tggl-Api-Key header. Use a comma-separated string to pass the array via the environment variable. |
| storages | | | [] | An array of Storage objects able to store and retrieve a string, used to persist the config between restarts |
| | POSTGRES_URL | | | If set, the configuration will be stored in Postgres in a table named tggl_config. |
| | REDIS_URL | | | If set, the configuration will be stored in Redis in a key named tggl_config. |
| | S3_BUCKET_NAME, S3_REGION, S3_ACCESS_KEY_ID, S3_SECRET_ACCESS_KEY | | | If all are set, the configuration will be stored in AWS S3 in a key named tggl_config. |
| maxConfigAge | TGGL_MAX_CONFIG_AGE | | 30000 | The max age in milliseconds of the last successful config sync before the /health endpoint returns a 503. If 0 or less, the health check will pass even if the config is old or was never fetched. |
| maxStartupTime | TGGL_MAX_STARTUP_TIME | | 10000 | The max time in milliseconds the proxy can hold requests when starting, before serving an empty config if it cannot fetch the config from the API or storage |
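The lookup order stated at the top of this reference (option, then environment variable, then default) can be sketched for numeric and boolean options. resolveNumber, resolveBoolean, and the env parameter are hypothetical; in Node you would pass process.env. How the proxy actually parses environment strings is an assumption here: non-numeric values and anything other than 'true' or 'false' fall through to the default.

```typescript
type Env = Record<string, string | undefined>

// Option value wins, then the environment variable, then the default.
function resolveNumber(option: number | undefined, env: Env, envVar: string, fallback: number): number {
  if (option !== undefined) return option
  const raw = env[envVar]
  // Assumption: blank or non-numeric strings are ignored.
  if (raw !== undefined && raw.trim() !== '' && !isNaN(Number(raw))) return Number(raw)
  return fallback
}

// Assumption: only the exact strings 'true' and 'false' are recognized.
function resolveBoolean(option: boolean | undefined, env: Env, envVar: string, fallback: boolean): boolean {
  if (option !== undefined) return option
  const raw = env[envVar]
  if (raw === 'true') return true
  if (raw === 'false') return false
  return fallback
}
```

For example, resolveNumber(undefined, process.env, 'TGGL_POLLING_INTERVAL', 5000) yields 5000 unless the variable holds a valid number.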